Introduction

In this very practical article, we will build two of the most interesting technologies in the Big Data ecosystem: Apache Kudu and Apache Impala. We will build both from source code, package them, and deploy them with the minimum configuration needed to make them functional (performance-related configuration is out of scope this time). In addition, we will set up Apache Impala to be as independent from HDFS as possible.

Apache Kudu and Apache Impala publish their versions only as source releases. They do not distribute binaries, so users have to build the projects from source code. Most projects in the Big Data ecosystem and the Apache Software Foundation are written in Java, which makes them easy to build and especially easy to distribute: in most cases the target machine only needs a JVM.

Apache Kudu and Apache Impala are an exception. Their core code base is C++, which makes building them, and above all distributing them to the target systems, considerably more complicated.

Built Versions

To build the two technologies we will use the main development branch of each project. The result is therefore the upcoming, not yet released versions (development snapshots), namely:

- Apache Kudu – 1.17.0-SNAPSHOT

- Apache Impala – 4.1.0-SNAPSHOT

We will use CentOS 7.x as the build platform, since the technologies will be deployed on CentOS 7.x hosts.
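Because the resulting C++ binaries are linked against the build host's system libraries (glibc in particular), it is worth failing fast when a build accidentally runs on a different distribution. Below is a minimal preflight sketch. It assumes the standard /etc/os-release file, and `check_centos7` is a hypothetical helper that is not part of Kudu or Impala:

```shell
#!/usr/bin/env bash
# Hypothetical preflight helper: succeeds only if the given os-release file
# (default: /etc/os-release) describes a CentOS 7.x system.
check_centos7() {
  local os_release="${1:-/etc/os-release}"
  if [[ ! -r "$os_release" ]]; then
    echo "cannot read $os_release" >&2
    return 2
  fi
  local id version
  # Source the file in subshells so its variables do not leak into the caller.
  id=$(. "$os_release" && echo "${ID:-}")
  version=$(. "$os_release" && echo "${VERSION_ID:-}")
  if [[ "$id" == "centos" && "$version" == 7* ]]; then
    echo "OK: CentOS $version"
    return 0
  fi
  echo "unexpected build host: ID=$id VERSION_ID=$version (expected centos 7)" >&2
  return 1
}
```

Placing `check_centos7 || exit 1` at the top of a build script stops the script early on, for example, a CentOS 8 or Ubuntu host.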

Building Kudu – 1.17.0-SNAPSHOT

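sudo yum -y install autoconf automake curl cyrus-sasl-devel cyrus-sasl-gssapi \
  cyrus-sasl-plain flex gcc gcc-c++ gdb git java-1.8.0-openjdk-devel \
  krb5-server krb5-workstation libtool make openssl-devel patch pkgconfig \
  redhat-lsb-core rsync unzip vim-common which centos-release-scl-rh tree vim

# devtoolset-8 comes from the SCL repository enabled by centos-release-scl-rh,
# so it has to be installed in a second, separate transaction:

sudo yum -y install devtoolset-8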

git clone https://github.com/apache/kudu kudu.git

cd kudu.git

build-support/enable_devtoolset.sh thirdparty/build-if-necessary.sh

mkdir -p build/release

cd build/release

../../build-support/enable_devtoolset.sh \
  ../../thirdparty/installed/common/bin/cmake \
  -DCMAKE_BUILD_TYPE=release \
  ../..

make -j4

sudo mkdir /opt/kudu && sudo chown -R ${USER}: /opt/kudu

make DESTDIR=/opt/kudu install

In the case of Apache Kudu, thanks to the “make install” rule, it is easy to organize the artifacts and their dependencies for subsequent packaging and deployment. Let’s take a look at the generated artifacts.

/opt/kudu/
└── usr
    └── local
        ├── share (lots of files)
        ├── include (lots of files)
        ├── bin
        │   └── kudu (the CLI)
        ├── lib64
        │   ├── libkudu_client.so -> libkudu_client.so.0
        │   ├── libkudu_client.so.0 -> libkudu_client.so.0.1.0
        │   └── libkudu_client.so.0.1.0
        └── sbin
            ├── kudu-master
            └── kudu-tserver

# From the directory that contains kudu.git, copy the web UI document root manually:

cp -r kudu.git/www/ /opt/kudu/usr/local/

# Copy the HMS Kudu plugin manually as well:

mkdir -p /opt/kudu/usr/local/lib

cp kudu.git/build/release/bin/hms-plugin.jar /opt/kudu/usr/local/lib
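A quick check that the staged tree contains everything the systemd units and the HMS step depend on can save a round trip to every node. Below is a sketch of such a check. `verify_kudu_stage` is a hypothetical helper, and its file list simply mirrors the paths used in this article:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a staged Kudu tree (default /opt/kudu)
# contains the files used later by packaging, systemd units and the HMS step.
# Returns the number of missing entries (0 means everything is present).
verify_kudu_stage() {
  local prefix="${1:-/opt/kudu}/usr/local"
  local missing=0 f
  for f in \
    sbin/kudu-master \
    sbin/kudu-tserver \
    bin/kudu \
    lib64/libkudu_client.so.0.1.0 \
    lib/hms-plugin.jar \
    www; do
    if [[ ! -e "$prefix/$f" ]]; then
      echo "missing: $prefix/$f" >&2
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}
```

Run it as `verify_kudu_stage /opt/kudu` before the packaging step below.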

Packaging

rm -fr /opt/kudu/usr/local/share

rm -fr /opt/kudu/usr/local/include

tar cvzf kudu-1.17.0-SNAPSHOT.tar.gz /opt/kudu # (514 MB compressed)

Installation

All Machines

scp kudu-1.17.0-SNAPSHOT.tar.gz <all-nodes>:

sudo yum install cyrus-sasl-gssapi cyrus-sasl-plain cyrus-sasl-devel krb5-server krb5-workstation openssl lzo-devel tzdata

sudo mkdir -p /var/lib/kudu/wal

sudo mkdir -p /var/lib/kudu/data

sudo mkdir -p /var/log/kudu

sudo adduser -r kudu

sudo chown -R kudu: /var/lib/kudu

sudo chown -R kudu: /var/log/kudu

sudo tar xvzf kudu-1.17.0-SNAPSHOT.tar.gz -C /

sudo mkdir /opt/kudu/conf

sudo chown -R kudu: /opt/kudu/
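With more than a handful of nodes, the copy-and-unpack part of the steps above is easier to script. The sketch below assumes passwordless SSH and passwordless sudo on every node. `run` and `distribute_tarball` are hypothetical helpers. Package installation, user creation and the chown steps still have to be done separately. Unpacking does not depend on the kudu user existing, so the chown steps can run afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: copy a tarball to each node and unpack it under /.
# Assumes passwordless SSH and sudo on every node. With DRY_RUN=1 the
# commands are printed instead of executed.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_tarball() {
  local tarball="$1"
  shift
  local name node
  name=$(basename "$tarball")
  for node in "$@"; do
    run scp "$tarball" "${node}:" || return 1
    # scp drops the file in the remote home directory, where ssh commands start.
    run ssh "$node" sudo tar xzf "$name" -C / || return 1
  done
}
```

For example, `DRY_RUN=1 distribute_tarball kudu-1.17.0-SNAPSHOT.tar.gz worker1 worker2` prints the scp and ssh commands for two hypothetical hosts without running them.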

Single Kudu Master Machine

cat << EOF | sudo tee /opt/kudu/conf/master.gflagfile
--webserver_doc_root=/opt/kudu/usr/local/www
--log_dir=/var/log/kudu
--fs_wal_dir=/var/lib/kudu/wal
--fs_data_dirs=/var/lib/kudu/data
--rpc_encryption=optional
--rpc_authentication=optional
--rpc_negotiation_timeout_ms=5000
EOF

sudo chown kudu: /opt/kudu/conf/master.gflagfile

cat << EOF | sudo tee /etc/systemd/system/kudu-master.service
[Unit]
Description=Apache Kudu Master Server
Documentation=http://kudu.apache.org

[Service]
Environment=KUDU_HOME=/var/lib/kudu
ExecStart=/opt/kudu/usr/local/sbin/kudu-master --flagfile=/opt/kudu/conf/master.gflagfile
TimeoutStopSec=5
Restart=on-failure
User=kudu

[Install]
WantedBy=multi-user.target
EOF

sudo systemctl daemon-reload

sudo systemctl start kudu-master

sudo systemctl enable kudu-master

Kudu Tablet Server Machines

cat << EOF | sudo tee /opt/kudu/conf/tserver.gflagfile
--tserver_master_addrs=kudu-master1.node.keedio.cloud:7051
--webserver_doc_root=/opt/kudu/usr/local/www
--log_dir=/var/log/kudu
--fs_wal_dir=/var/lib/kudu/wal
--fs_data_dirs=/var/lib/kudu/data
--rpc_encryption=optional
--rpc_authentication=optional
--rpc_negotiation_timeout_ms=5000
EOF

sudo chown kudu: /opt/kudu/conf/tserver.gflagfile

cat << EOF | sudo tee /etc/systemd/system/kudu-tserver.service
[Unit]
Description=Apache Kudu Tablet Server
Documentation=http://kudu.apache.org

[Service]
Environment=KUDU_HOME=/var/lib/kudu
ExecStart=/opt/kudu/usr/local/sbin/kudu-tserver --flagfile=/opt/kudu/conf/tserver.gflagfile
TimeoutStopSec=5
Restart=on-failure
User=kudu

[Install]
WantedBy=multi-user.target
EOF

sudo systemctl daemon-reload

sudo systemctl start kudu-tserver

sudo systemctl enable kudu-tserver
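After the services start, it helps to wait until they actually accept connections before moving on. The sketch below uses bash's built-in /dev/tcp redirection, so neither nc nor telnet is required. `wait_for_port` is a hypothetical helper. The ports worth probing are Kudu's defaults: 7051 (master RPC), 8051 (master web UI), 7050 (tablet server RPC) and 8050 (tablet server web UI).

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll HOST:PORT until it accepts TCP connections or
# LIMIT seconds (default 60) have passed. Uses bash's /dev/tcp redirection.
wait_for_port() {
  local host="$1" port="$2" limit="${3:-60}"
  local deadline=$((SECONDS + limit))
  while (( SECONDS < deadline )); do
    # Connect from a child bash so that `timeout` can cut off a hanging attempt.
    if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      echo "${host}:${port} is up"
      return 0
    fi
    sleep 1
  done
  echo "${host}:${port} not reachable after ${limit}s" >&2
  return 1
}
```

For example, run `wait_for_port kudu-master1.node.keedio.cloud 7051` on any node. Once the master is up, `/opt/kudu/usr/local/bin/kudu cluster ksck kudu-master1.node.keedio.cloud:7051` gives a cluster-wide health report that includes the registered tablet servers.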

Building Impala – 4.1.0-SNAPSHOT

git clone https://github.com/apache/impala.git

cd impala

export IMPALA_HOME=$PWD

./bin/bootstrap_system.sh

source ./bin/impala-config.sh

./buildall.sh -noclean -notests

Packaging

sudo mkdir -p /opt/impala && sudo chown -R ${USER}: /opt/impala

mkdir -p /opt/impala/lib64

mkdir /opt/impala/sbin

mkdir /opt/impala/lib

mkdir /opt/impala/bin

cp -r impala/www/ /opt/impala/

cp \
  impala/toolchain/toolchain-packages-gcc7.5.0/gcc-7.5.0/lib64/libgcc_s.so.1 \
  impala/toolchain/toolchain-packages-gcc7.5.0/gcc-7.5.0/lib64/libstdc++.so.6.0.24 \
  /opt/impala/lib64

cp impala/toolchain/toolchain-packages-gcc7.5.0/kudu-67ba3cae45/debug/lib64/libkudu_client.so.0.1.0 /opt/impala/lib64/

cp -P impala/be/build/debug/service/* /opt/impala/sbin/

cp impala/fe/target/impala-frontend-4.1.0-SNAPSHOT.jar /opt/impala/lib

cp impala/fe/target/dependency/*.jar /opt/impala/lib

cp -r impala/shell/build/impala-shell-4.1.0-SNAPSHOT /opt/impala/bin/shell

sudo cp -r impala/toolchain/cdp_components-23144489/apache-hive-3.1.3000.7.2.15.0-88-bin/ /opt/hive

sudo cp -r impala/toolchain/cdp_components-23144489/hadoop-3.1.1.7.2.15.0-88/ /opt/hadoop

tar cvzf impala-4.1.0-SNAPSHOT.tar.gz \
  -P /opt/impala/ \
  -P /opt/hive/ \
  -P /opt/hadoop/ # (1861 MB compressed)
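Given the size of the Impala archive, it is cheap insurance to confirm that the key members made it into the tarball before copying it everywhere. Below is a sketch of such a check. `check_tarball_members` is a hypothetical helper. It strips a leading slash from both the listing and the requested names, so it behaves the same whether or not the archive was created with `-P`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a .tar.gz contains every listed member.
# A leading '/' is ignored on both sides, so archives created with or without
# tar's -P option are handled the same way.
check_tarball_members() {
  local tarball="$1"
  shift
  local listing member missing=0
  listing=$(tar tzf "$tarball") || return 2
  listing=$(sed 's#^/##' <<< "$listing")
  for member in "$@"; do
    if ! grep -Fxq -- "${member#/}" <<< "$listing"; then
      echo "missing from ${tarball}: ${member}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `check_tarball_members impala-4.1.0-SNAPSHOT.tar.gz /opt/impala/sbin/impalad /opt/impala/lib64/libkudu_client.so.0.1.0 /opt/hive/bin/hive`.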

Installation

All Machines

scp impala-4.1.0-SNAPSHOT.tar.gz <all-nodes>:

sudo yum install -y java-1.8.0-openjdk-devel

sudo adduser -r impala

sudo mkdir /var/log/impala

sudo chown -R impala: /var/log/impala

sudo tar xzf impala-4.1.0-SNAPSHOT.tar.gz -P -C /

sudo mkdir /opt/impala/conf

sudo chown -R impala: /opt/impala/

sudo tee /etc/ld.so.conf.d/impala.conf <<EOF
/opt/impala/lib64
EOF

sudo ldconfig
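Entries in /etc/ld.so.conf.d only take effect after `sudo ldconfig` rebuilds the linker cache. Until then, a missing library shows up only when impalad fails to start. The sketch below scans `ldd` output for unresolved libraries. `missing_libs` is a hypothetical helper that reads the ldd output on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ldd` output on stdin and print each shared library
# the dynamic linker could not resolve. Returns 1 if any are missing, else 0.
missing_libs() {
  awk '/=> not found/ { print $1; missing = 1 } END { exit missing }'
}
```

On each node, `ldd /opt/impala/sbin/impalad | missing_libs` should print nothing and return 0 once libstdc++, libgcc_s and libkudu_client resolve from /opt/impala/lib64.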

HMS on the Master Machine

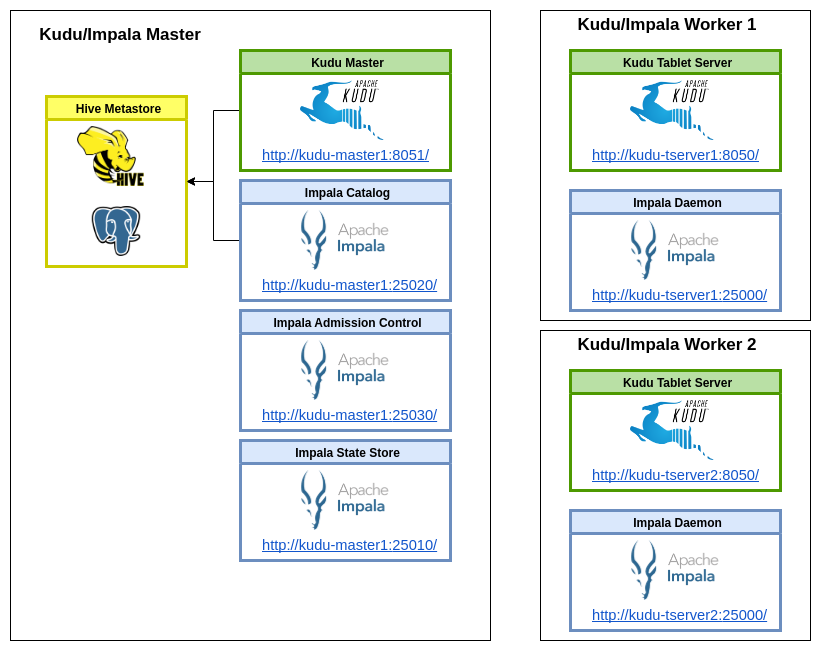

We install the Hive Metastore (HMS) on the Kudu and Impala master node (it could equally be installed on a separate host).

sudo yum install -y postgresql postgresql-server postgresql-contrib postgresql-jdbc

sudo postgresql-setup initdb

sudo sed -i "s/^#listen_addresses =.*/listen_addresses = '*'/" /var/lib/pgsql/data/postgresql.conf

echo 'host all all 0.0.0.0/0 md5' | sudo tee -a /var/lib/pgsql/data/pg_hba.conf

sudo systemctl start postgresql

sudo systemctl enable postgresql

sudo -u postgres psql -c "ALTER USER postgres PASSWORD 'postgres';"

sudo -u postgres psql -c "CREATE DATABASE metastore;"

psql postgresql://postgres:postgres@kudu-master1.node.keedio.cloud -c '\l' | grep metastore

sudo adduser -r hive

sudo chown -R hive: /opt/hive/

sudo ln -s /usr/share/java/postgresql-jdbc.jar /opt/hive/lib/

sudo cp /opt/kudu/usr/local/lib/hms-plugin.jar /opt/hive/lib

sudo chown hive: /opt/hive/lib/hms-plugin.jar

sh impala-config.sh

sudo systemctl daemon-reload

# schematool needs HADOOP_HOME and JAVA_HOME to locate Hadoop and the JVM:

HADOOP_HOME=/opt/hadoop JAVA_HOME=/usr/lib/jvm/java /opt/hive/bin/schematool -initSchema -dbType postgres

sudo systemctl start metastore

sudo systemctl start impala-statestore

sudo systemctl start impala-catalog

sudo systemctl start impala-admission

sudo systemctl enable metastore

sudo systemctl enable impala-statestore

sudo systemctl enable impala-catalog

sudo systemctl enable impala-admission
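Shell and sed quoting make the `listen_addresses` edit easy to get wrong, because the replacement value itself contains single quotes. The sketch below wraps the same kind of substitution in a small function so it can be tried on a scratch copy of postgresql.conf first. `enable_remote_listen` is hypothetical. Its pattern also matches a line that has already been uncommented, so running it twice is harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make PostgreSQL listen on all interfaces by rewriting the
# listen_addresses line (commented out or not) in the given postgresql.conf.
enable_remote_listen() {
  local conf="$1"
  # '#\{0,1\}' is the POSIX BRE spelling of an optional leading '#'.
  sed -i "s/^#\{0,1\}listen_addresses *=.*/listen_addresses = '*'/" "$conf"
}
```

For example, `cp /var/lib/pgsql/data/postgresql.conf /tmp/pg.conf && enable_remote_listen /tmp/pg.conf && grep listen_addresses /tmp/pg.conf` lets you check the result before editing the real file.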

Multiple Impala Servers

Impala daemons (impalad) run only on the Kudu tablet server nodes (the workers).

sudo adduser -r hive

sudo chown -R hive: /opt/hive/

sudo ln -s /usr/share/java/postgresql-jdbc.jar /opt/hive/lib/

sudo cp /opt/kudu/usr/local/lib/hms-plugin.jar /opt/hive/lib

sudo chown hive: /opt/hive/lib/hms-plugin.jar

sh impala-config.sh

sudo systemctl daemon-reload

sudo systemctl start impala

sudo systemctl enable impala

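impala-config.sh helper script

The impala-config.sh script run in the previous steps is our own helper, not the bin/impala-config.sh from the Impala source tree. It writes the systemd units and the configuration files for Impala, the Hive Metastore and Hadoop: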

cat << EOF | sudo tee /etc/systemd/system/impala.service

[Unit]

Description=Apache Impala Daemon

Documentation=http://impala.apache.org

[Service]

EnvironmentFile=/opt/impala/conf/impala.env

ExecStart=/opt/impala/sbin/impalad --flagfile=/opt/impala/conf/impala.gflagfile

TimeoutStopSec=5

Restart=on-failure

User=impala

[Install]

WantedBy=multi-user.target

EOF

cat << EOF | sudo tee /etc/systemd/system/impala-catalog.service

[Unit]

Description=Apache Impala Catalog Daemon

Documentation=http://impala.apache.org

[Service]

EnvironmentFile=/opt/impala/conf/impala.env

ExecStart=/opt/impala/sbin/catalogd --flagfile=/opt/impala/conf/catalog.gflagfile

TimeoutStopSec=5

Restart=on-failure

User=impala

[Install]

WantedBy=multi-user.target

EOF

cat << EOF | sudo tee /etc/systemd/system/impala-statestore.service

[Unit]

Description=Apache Impala StateStore Daemon

Documentation=http://impala.apache.org

[Service]

EnvironmentFile=/opt/impala/conf/impala.env

ExecStart=/opt/impala/sbin/statestored --flagfile=/opt/impala/conf/statestore.gflagfile

TimeoutStopSec=5

Restart=on-failure

User=impala

[Install]

WantedBy=multi-user.target

EOF

cat << EOF | sudo tee /etc/systemd/system/impala-admission.service

[Unit]

Description=Apache Impala Admission Control Daemon

Documentation=http://impala.apache.org

[Service]

EnvironmentFile=/opt/impala/conf/impala.env

ExecStart=/opt/impala/sbin/admissiond --flagfile=/opt/impala/conf/admission.gflagfile

TimeoutStopSec=5

Restart=on-failure

User=impala

[Install]

WantedBy=multi-user.target

EOF

cat << EOF | sudo tee /opt/impala/conf/impala.env

IMPALA_HOME=/opt/impala

JAVA_HOME=/usr/lib/jvm/java/

CLASSPATH=/opt/impala/lib/*:/opt/hive/lib/*

HADOOP_HOME=/opt/hadoop

HIVE_HOME=/opt/hive

HIVE_CONF=/opt/hive/conf

EOF

sudo chown impala: /opt/impala/conf/impala.env

cat << EOF | sudo tee /opt/impala/conf/impala.gflagfile

--abort_on_config_error=false

--log_dir=/var/log/impala

--state_store_host=kudu-master1.node.keedio.cloud

--catalog_service_host=kudu-master1.node.keedio.cloud

--admission_service_host=kudu-master1.node.keedio.cloud

--kudu_master_hosts=kudu-master1.node.keedio.cloud

--enable_legacy_avx_support=true

EOF

sudo chown impala: /opt/impala/conf/impala.gflagfile

cat << EOF | sudo tee /opt/impala/conf/catalog.gflagfile

--kudu_master_hosts=kudu-master1.node.keedio.cloud

--log_dir=/var/log/impala

--enable_legacy_avx_support=true

EOF

sudo chown impala: /opt/impala/conf/catalog.gflagfile

cat << EOF | sudo tee /opt/impala/conf/statestore.gflagfile

--kudu_master_hosts=kudu-master1.node.keedio.cloud

--log_dir=/var/log/impala

--enable_legacy_avx_support=true

EOF

sudo chown impala: /opt/impala/conf/statestore.gflagfile

cat << EOF | sudo tee /opt/impala/conf/admission.gflagfile

--kudu_master_hosts=kudu-master1.node.keedio.cloud

--log_dir=/var/log/impala

--enable_legacy_avx_support=true

EOF

sudo chown impala: /opt/impala/conf/admission.gflagfile

cat << EOF | sudo tee /etc/systemd/system/metastore.service

[Unit]

Description=Apache Hive Metastore - HMS

Documentation=http://hive.apache.org

[Service]

Type=simple

Environment=JAVA_HOME=/usr/lib/jvm/java/

Environment=HADOOP_HOME=/opt/hadoop

ExecStart=/opt/hive/bin/hive --service metastore --hiveconf hive.root.logger=DEBUG,console

TimeoutStopSec=5

Restart=on-failure

User=hive

[Install]

WantedBy=multi-user.target

EOF

cat << EOF | sudo tee /opt/hive/conf/hive-site.xml

<configuration>

<property>

<name>hive.metastore.uris</name>

<value>thrift://kudu-master1.node.keedio.cloud:9083</value>

<description>Thrift URI for the remote metastore.

Used by metastore client to connect to remote metastore.

</description>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/var/lib/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>metastore.task.threads.always</name>

<value>org.apache.hadoop.hive.metastore.events.EventCleanerTask</value>

</property>

<property>

<name>metastore.expression.proxy</name>

<value>org.apache.hadoop.hive.metastore.DefaultPartitionExpressionProxy</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.postgresql.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:postgresql://kudu-master1.node.keedio.cloud:5432/metastore</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>postgres</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>postgres</value>

</property>

<property>

<name>hive.metastore.transactional.event.listeners</name>

<description>

Key integration point with Kudu: org.apache.kudu.hive.metastore.KuduMetastorePlugin is provided by hms-plugin.jar (a Kudu build artifact).

</description>

<value>

org.apache.hive.hcatalog.listener.DbNotificationListener,

org.apache.kudu.hive.metastore.KuduMetastorePlugin

</value>

</property>

<property>

<name>hive.metastore.disallow.incompatible.col.type.changes</name>

<value>false</value>

</property>

<property>

<!-- Required for automatic metadata sync. -->

<name>hive.metastore.dml.events</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

<description>

Should metastore do authorization against database notification

related APIs such as get_next_notification.

If set to true, then only the superusers in proxy settings have the permission.

</description>

</property>

</configuration>

EOF

cat << EOF | sudo tee /opt/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/cluster/nn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/cluster/1/dn,/cluster/2/dn</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

EOF

cat << EOF | sudo tee /opt/hadoop/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>file:///var/lib/hive</value>

<description>fake hdfs</description>

</property>

</configuration>

EOF
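The catalog, statestore and admission flag files in the script above differ only in their names, so they can be generated from one template. Below is a sketch of that approach. `write_daemon_flagfiles` is a hypothetical helper, and both the target directory and the Kudu master host are parameters. Their defaults are the values used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write catalog, statestore and admission flag files from
# a single template instead of three copy-pasted heredocs.
write_daemon_flagfiles() {
  local conf_dir="${1:-/opt/impala/conf}"
  local kudu_master="${2:-kudu-master1.node.keedio.cloud}"
  local daemon
  for daemon in catalog statestore admission; do
    cat > "${conf_dir}/${daemon}.gflagfile" <<EOF || return 1
--kudu_master_hosts=${kudu_master}
--log_dir=/var/log/impala
--enable_legacy_avx_support=true
EOF
  done
}
```

Writing into /opt/impala/conf needs root. One option is to generate the files into a scratch directory as a normal user and then copy them with `sudo install -o impala -g impala -m 644 "$dir"/*.gflagfile /opt/impala/conf/`.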

Enabling Kudu Sync with Metastore

Kudu has an optional feature which allows it to integrate its own catalog with the Hive Metastore (HMS). The HMS is the de-facto standard catalog and metadata provider in the Hadoop ecosystem. When the HMS integration is enabled, Kudu tables can be discovered and used by external HMS-aware tools (in our case the Impala Server).

Kudu has tight integration with Apache Impala, allowing you to use Impala to insert, query, update, and delete data from Kudu tablets using Impala’s SQL syntax, as an alternative to using the Kudu APIs to build a custom Kudu application. In addition, you can use JDBC or ODBC to connect existing or new applications written in any language, framework, or business intelligence tool to your Kudu data, using Impala as the broker.

echo '--hive_metastore_uris=thrift://kudu-master1.node.keedio.cloud:9083' | sudo tee -a /opt/kudu/conf/master.gflagfile

# The master flag file should now look like this:

cat /opt/kudu/conf/master.gflagfile
--webserver_doc_root=/opt/kudu/usr/local/www
--log_dir=/var/log/kudu
--fs_wal_dir=/var/lib/kudu/wal
--fs_data_dirs=/var/lib/kudu/data
--rpc_encryption=optional
--rpc_authentication=optional
--rpc_negotiation_timeout_ms=5000
--hive_metastore_uris=thrift://kudu-master1.node.keedio.cloud:9083

sudo systemctl restart kudu-master
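With the HMS integration active, a short round trip through Impala exercises the chain described above: table creation, insert, upsert, delete and query. With the integration enabled, the new table should also be registered in the Hive Metastore. Below is a sketch of such a smoke test. The table name, the impala-shell path under /opt/impala/bin/shell and the target impalad are assumptions. Kudu's default replication factor is 3, so the CREATE TABLE needs at least three tablet servers.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test for the Impala <-> Kudu integration: writes a small
# SQL script and runs it with impala-shell against one impalad.
# The impala-shell path is an assumption based on the packaging step above.
IMPALA_SHELL=${IMPALA_SHELL:-/opt/impala/bin/shell/impala-shell}

write_kudu_smoke_sql() {
  local table="${1:-kudu_smoke_test}"
  cat <<EOF
CREATE TABLE IF NOT EXISTS ${table} (
  id BIGINT,
  name STRING,
  PRIMARY KEY (id)
)
PARTITION BY HASH (id) PARTITIONS 2
STORED AS KUDU;
INSERT INTO ${table} VALUES (1, 'one'), (2, 'two');
UPSERT INTO ${table} VALUES (2, 'TWO');
DELETE FROM ${table} WHERE id = 1;
SELECT * FROM ${table};
EOF
}

run_kudu_smoke_test() {
  local impalad="$1" sql_file
  sql_file=$(mktemp) || return 1
  write_kudu_smoke_sql > "$sql_file"
  "$IMPALA_SHELL" -i "$impalad" -f "$sql_file"
  local rc=$?
  rm -f "$sql_file"
  return "$rc"
}
```

Run it as `run_kudu_smoke_test <worker-node>`. The final SELECT should return a single row, `2, TWO`.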

Final Architecture